experiments with kinect

2020 | Processing sketch, Kinect

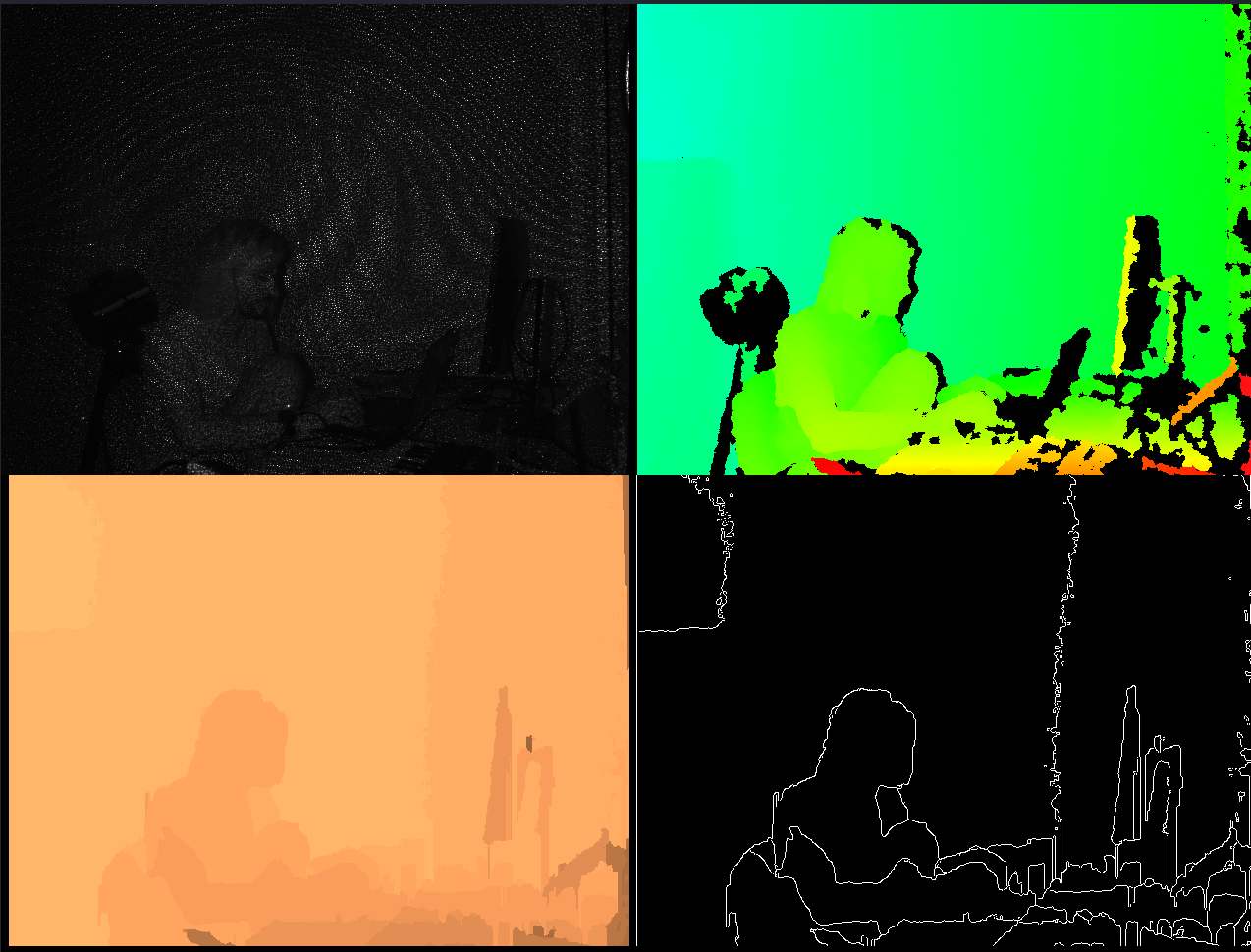

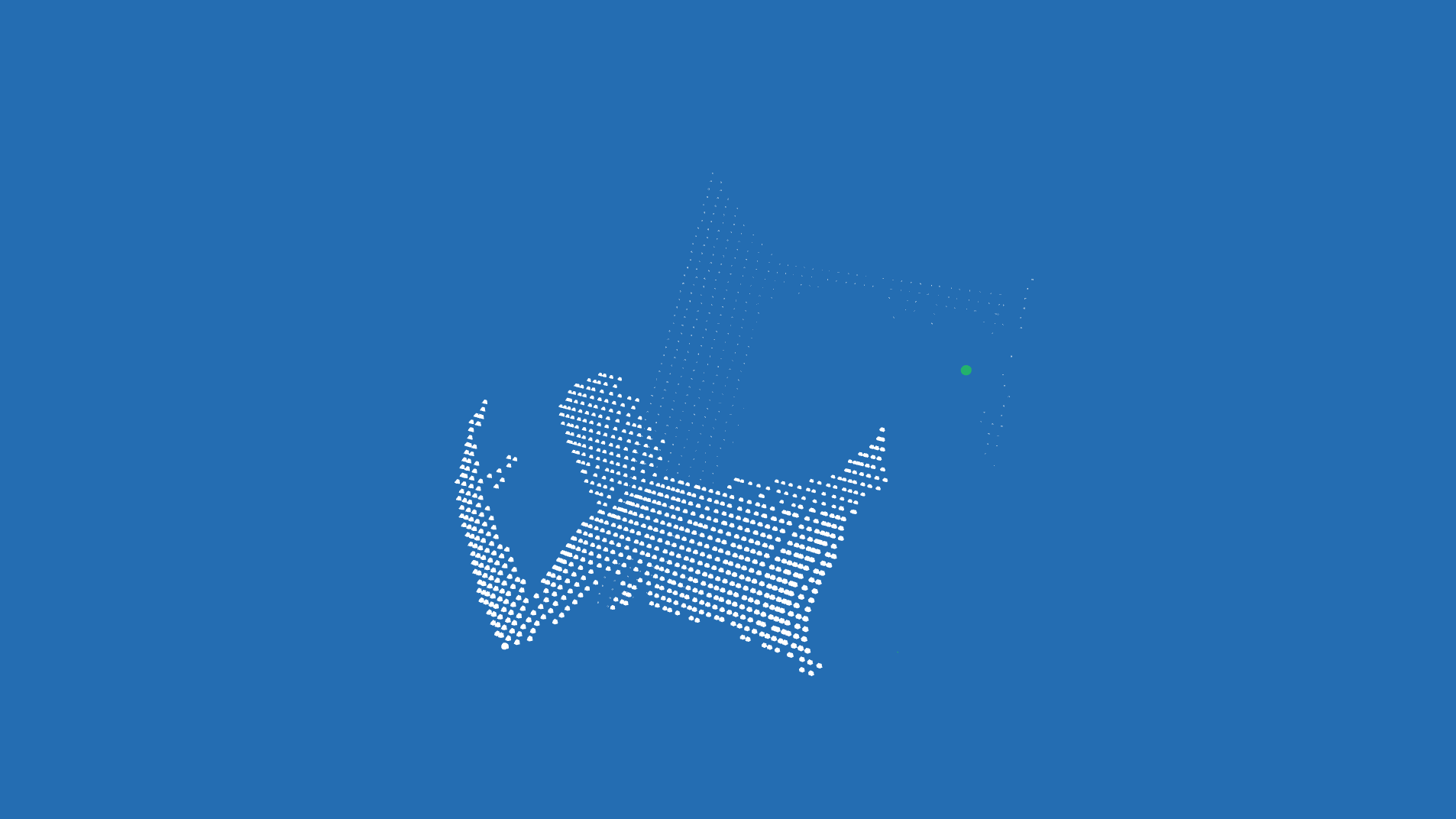

This project is an exploratory experiment with the Kinect 3D camera and MIDI signals. I wanted to create a visual backdrop for musical performances that reacts to the artist's movement and their music. First, a depth image recorded by the Kinect is converted into a 3D point cloud, which is then projected into an empty virtual space. Objects are spawned at these points and morph and stretch depending on the ambient noise. The observer floats through the space along random trajectories. On a key change signaled via MIDI, the origin and direction of the flight, the shape of the objects, and the colors and lighting of the various elements all change.
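
For readers curious about the depth-to-point-cloud step, here is a minimal sketch of the usual pinhole back-projection. It is not the code from the original Processing sketch. The function name `depth_to_points`, the `step` subsampling parameter, and the default intrinsics (`fx`, `fy`, `cx`, `cy`) are all assumptions chosen for illustration, and the depth values are assumed to already be in millimetres, with 0 meaning "no reading".

```python
def depth_to_points(depth_mm, width, height,
                    fx=594.0, fy=591.0, cx=320.0, cy=240.0, step=4):
    """Back-project a row-major depth image into a list of (x, y, z) points in metres.

    The intrinsics are placeholder values (assumptions), not calibrated
    numbers for any particular Kinect unit.
    """
    points = []
    # Visit every `step`-th pixel to keep the cloud sparse enough to draw.
    for v in range(0, height, step):
        for u in range(0, width, step):
            d = depth_mm[v * width + u]
            if d <= 0:
                continue  # the sensor returned no depth for this pixel
            z = d / 1000.0           # millimetres to metres
            x = (u - cx) * z / fx    # horizontal offset from the optical axis
            y = (v - cy) * z / fy    # vertical offset from the optical axis
            points.append((x, y, z))
    return points


if __name__ == "__main__":
    # Synthetic 640x480 frame: a flat wall two metres from the camera.
    frame = [2000] * (640 * 480)
    cloud = depth_to_points(frame, 640, 480)
    print(len(cloud), "points, first:", cloud[0])
```

Each returned point could then serve as the spawn position for one object in the virtual space. Raising `step` trades detail for frame rate.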

As I didn't know about TouchDesigner or openFrameworks, this project was painstakingly cobbled together with libfreenect, Processing, Pure Data and some finicky MIDI piping.